Research Paper Publication

Note: This publication can be seen here.

Paper Title

Hybrid VGG19 With Combined LSTM Deep Neural Network for Improved Brain Tumor Classification

Authors

N. Naga Uma Mahesh, N. Teja, N. Veena Sri Sai, M. Vijaya Sri

Introduction

Brain tumors present critical diagnostic challenges, given their complex nature and the urgent need for early intervention. Traditional diagnosis, heavily reliant on manual MRI interpretation by radiologists, is time-consuming and susceptible to errors. This research responds to the increasing demand for automated, accurate, and consistent brain tumor classification by leveraging contemporary deep learning techniques.

Motivation

High rates of diagnostic delay, a shortage of skilled medical professionals, and variability in image interpretation often impact treatment for brain tumor patients. The project is motivated by the potential of artificial intelligence (AI) to automate this process, providing reliable tools for clinicians that minimize risk of human error and optimize outcomes.

Literature Review

Earlier work in the field established the effectiveness of convolutional neural network (CNN) architectures like VGG, ResNet, and MobileNet in classifying medical imagery. Hybrid models, such as combining CNNs with feature-fusion and sequence models, have further improved accuracy. Notably, LSTM units can capture sequential dependencies, yielding better predictions for complex datasets.

Proposed Solution

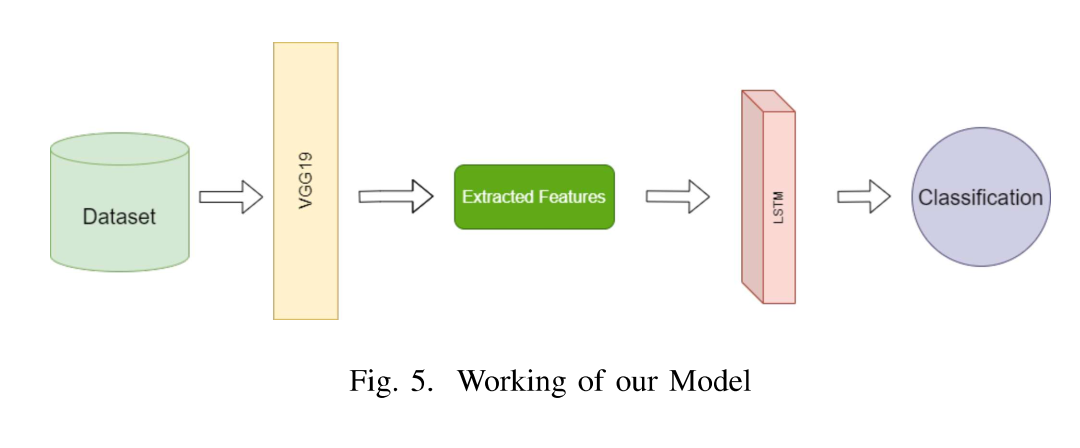

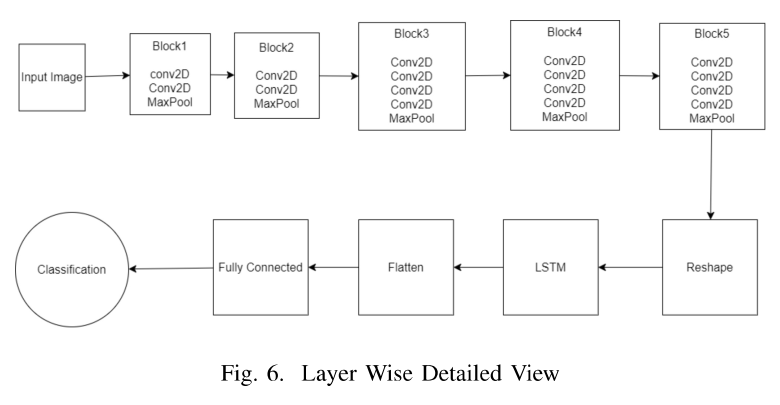

This paper introduces a hybrid model that integrates a pre-trained VGG19 architecture for robust feature extraction with an LSTM layer to retain sequential dependencies in image features. The approach involves:

- Collecting and preprocessing a diverse [Kaggle-based] MRI dataset covering glioma, meningioma, pituitary tumors, and healthy controls.

- Employing data augmentation (rotations, brightness, flips, etc.) to improve generalization.

- Training the hybrid VGG19+LSTM network with class-balanced data and optimizing using Adam, enabling the system to distinguish nuanced tumor patterns.

Methodology

- Preprocessing: Images are resized, normalized, and augmented before training.

- Model: The VGG19 network extracts spatial features; these are passed to an LSTM to capture temporal dependencies across image sections.

- Backend & Deployment: A Flask-based web application enables easy MRI image uploads and real-time prediction for clinical use.

- Testing & Evaluation: The model is evaluated using multiple metrics (accuracy, precision, sensitivity, specificity) with end-to-end unit and integration testing to ensure robustness.

Results

The hybrid VGG19+LSTM model achieved:

- 98% test accuracy, outperforming standalone CNN or LSTM models in both precision and computational speed.

- Significantly lowered inference time, supporting potential real-time clinical applications.

- Generalizability validated via comparison with alternatives in recent literature and through rigorous module-wise and end-to-end testing on new datasets.

Challenges

- Ensuring data consistency given the heterogeneous nature of MRI datasets.

- Balancing model complexity with inference time, so predictions remain fast and resource-efficient.

- Creating an application that is both user-friendly for clinicians and robust under real-world scenarios.

Impact and Significance

- Enables clinicians to rapidly classify tumor types from MRI scans with much higher throughput than manual methods.

- Offers an accessible tool for hospital settings, especially where expert radiologists are scarce.

- Demonstrates the applicability of AI in life-critical diagnosis and supports research in automated medical imaging.

Future Work

- Expansion to multisite, multi-device datasets to further enhance model robustness.

- Integration with cloud-based systems for remote diagnosis and consultation.

- Ongoing optimization to maintain cutting-edge accuracy and minimal computational overhead as new AI techniques emerge.

Would you like to include code snippets, application screenshots, downloadable reports, or further highlight your personal contributions for your portfolio?